WHY THIS MATTERS IN BRIEF

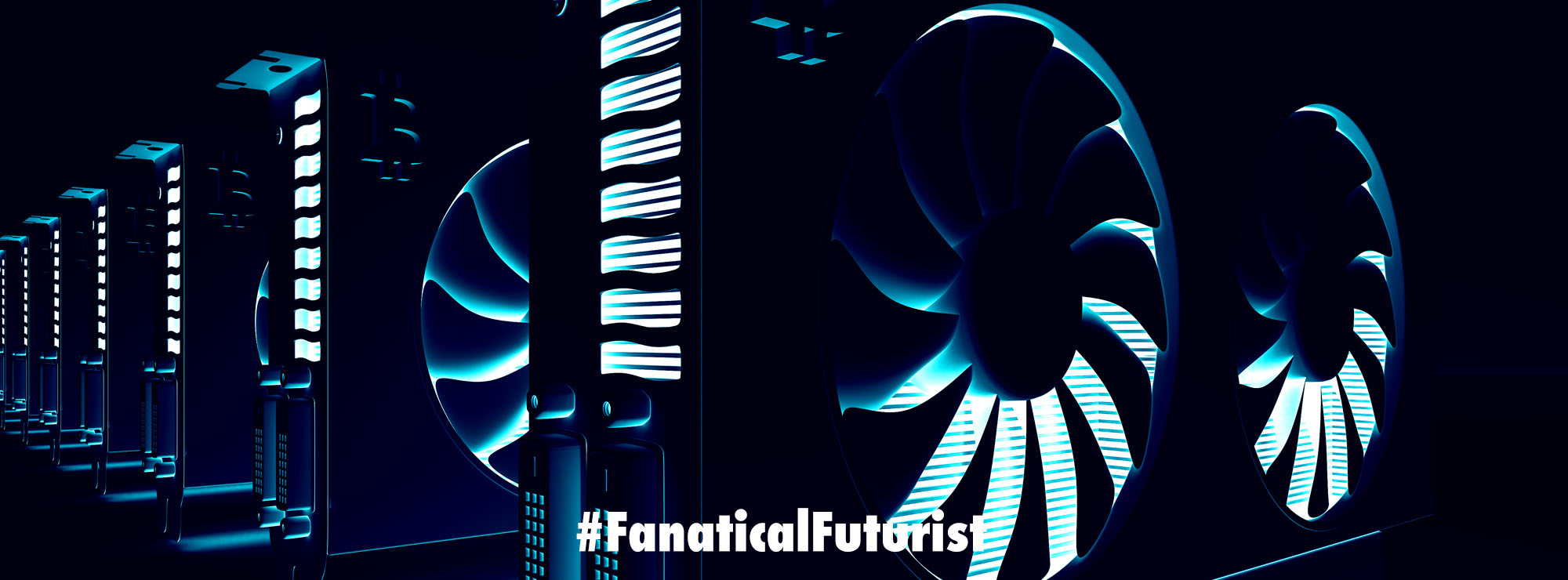

There are still a lot of inefficiencies and bottlenecks in computer chips, so this new funding is aimed at creating even better, faster AI chips.

Love the Exponential Future? Join our XPotential Community, future proof yourself with courses from XPotential University, read about exponential tech and trends, connect, watch a keynote, or browse my blog.

As companies look to accelerate every part of the Artificial Intelligence (AI) development pipeline, wafer-scale chip designer Cerebras Systems, which makes dinner-plate-sized AI chips and some of the world's fastest AI supercomputers, and Canadian silicon photonics firm Ranovus have been awarded a $45 million contract from DARPA to ease the compute bottlenecks holding back AI.

In a statement announcing the project, the two companies said they will "solve the communication bottleneck" by integrating Ranovus' advanced co-packaged optics interconnects with Cerebras' wafer-scale chips.

Under the terms of the initiative, Cerebras and Ranovus are expected to deliver “the industry-first wafer-scale photonic interconnect solution” that would enable “compute performance impossible to achieve today” using a “fraction of the power consumed by GPUs tied together with traditional switches.”

The project will support DARPA’s Digital RF Battlespace Emulator (DRBE) program, which explores novel computing architectures to create a “new breed” of high-performance computing, dubbed “Real Time HPC,” which will boost computational throughput whilst maintaining extremely low latency.

Cerebras is already involved in the DRBE program and is currently executing its third phase, delivering a leading-edge RF emulation supercomputer.

“By combining wafer scale technology and co-packaged optics interconnects, Cerebras will deliver a platform capable of real-time, high-fidelity simulations for the most challenging physical environment simulations and the largest scale AI workloads, pushing the boundaries of what is possible in AI and in high performance computing and AI,” said Andrew Feldman, co-founder and CEO of Cerebras.

Cerebras Systems develops wafer-scale chips. Its Wafer Scale Engine 3 packs four trillion transistors and 900,000 "AI cores" alongside 44GB of on-chip SRAM. The chip is sold as part of the CS-3 system, and the company claims it delivers 125 petaflops of peak AI performance.

In August 2024, Cerebras confidentially filed for an IPO with the SEC, although its share price and expected market cap have yet to be revealed.