WHY THIS MATTERS IN BRIEF

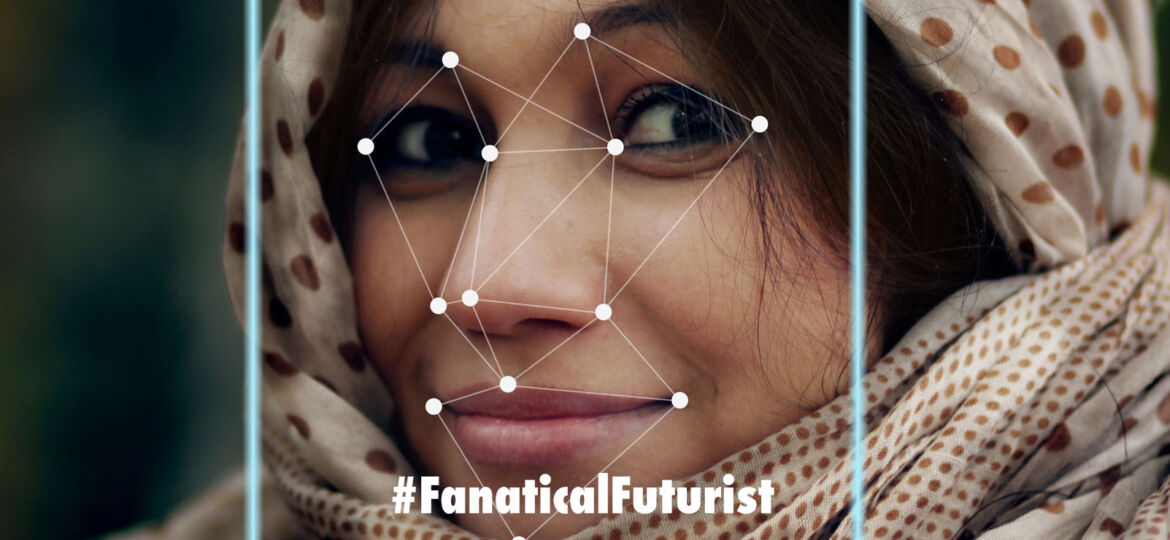

Privacy is becoming a battleground, and just as we can use AI to identify people, we can also use it to anonymise them.


It might sound counterintuitive, and perhaps slightly self-destructive, but researchers at Facebook, the company at the center of so many of today’s global privacy scandals, say they’ve used research from MIT, which I first talked about a few months ago, to create a new Artificial Intelligence (AI) machine learning system that “de-identifies individuals in video.” In short, they’ve created an AI that anonymises you, protects your privacy, and turns you invisible online, so that companies like Facebook that use facial recognition can no longer identify you. And while startups like D-ID and a number of other companies have already made so-called de-identification technology for still images, this is the first time researchers have created one that works on video, and in initial tests the team’s new method was able to thwart all of the state-of-the-art facial recognition systems it encountered.

As an added bonus, the system doesn’t need to be retrained every time it sees a new video in order to be effective. It works by mapping a slightly distorted image onto a person’s face to make it difficult for facial recognition technology to identify them.

“Face recognition can lead to loss of privacy and ‘face replacement technology’ [such as DeepFakes] may be misused to create misleading videos,” a paper explaining the approach reads. “Recent world events concerning the advances in, and abuse of facial recognition technology invoke the need to understand methods that successfully deal with de-identification. Our contribution is the only one suitable for video, including live video, and presents quality that far surpasses the literature methods.”

Facebook’s system pairs an adversarial AI autoencoder with a classifier network, and as part of its training the researchers tried to fool a mixture of facial recognition networks, said Facebook AI Research engineer and Tel Aviv University professor Lior Wolf.

“So the autoencoder tries to make life harder for the facial recognition networks, and it’s actually a general technique that can also be used if you want to create a system that masks any other type of biometric information, for example, someone’s voice or online behaviour, or any other type of identifiable information that you want to remove,” he added.

Like faceswap deepfake software, the AI uses an encoder-decoder architecture to generate both a mask and an image. During training, the person’s face is distorted then fed into the network, and then the system generates distorted and undistorted images of their face and creates an output that can be overlaid onto the video.
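To make the "mask plus image" idea concrete, here is a minimal sketch of how a generated face might be overlaid onto a video frame. It is not Facebook's code: the function name `composite`, the grayscale nested-list image format, and the per-pixel blending rule (mask weight times generated pixel, plus the remainder times the original pixel) are all assumptions chosen for illustration.

```python
def composite(frame, generated, mask):
    """Blend a generated face onto a frame, pixel by pixel.

    Hypothetical helper, not taken from the paper.
    frame, generated: 2-D lists of grayscale pixel values (0-255).
    mask: 2-D list of blend weights in [0, 1]; 1 means "use the
    generated pixel", 0 means "keep the original pixel".
    """
    if not (len(frame) == len(generated) == len(mask)):
        raise ValueError("frame, generated and mask must have the same height")

    output = []
    for frame_row, gen_row, mask_row in zip(frame, generated, mask):
        if not (len(frame_row) == len(gen_row) == len(mask_row)):
            raise ValueError("frame, generated and mask must have the same width")
        # Assumed blending rule: a weighted average controlled by the mask.
        output.append([
            m * g + (1.0 - m) * f
            for f, g, m in zip(frame_row, gen_row, mask_row)
        ])
    return output


# Toy 2x2 example: the mask keeps one pixel, replaces one, and blends two.
frame = [[100, 100], [100, 100]]
generated = [[200, 200], [200, 200]]
mask = [[0.0, 1.0], [0.5, 0.25]]
print(composite(frame, generated, mask))
```

In a real system the mask and the generated image would both come from the decoder, and the blend would run on every frame of the video; the sketch only shows the final overlay step.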

At the moment though, as grand as all this might sound, Facebook has no plans to roll the technology out, said a company spokesperson. Such methods could, however, enable public speech that remains recognisable to people while at the same time helping the speakers remain “anonymous.”

Anonymised faces in videos could also be used for the privacy-conscious training of AI systems. In May, for example, Google used Mannequin Challenge videos to train AI systems in order to improve video depth perception systems, and elsewhere UC Berkeley researchers have been training their AI agents to dance like people or do backflips by using YouTube videos as a training data set.

Facebook’s desire to be a leader in this area might also stem from controversy about its platforms being used to spread misinformation and about its own applications of facial recognition technology. Whatever the motivation, it’s an exciting experiment that goes to show that when it comes to losing our privacy in the future, it might not be all one-sided.