WHY THIS MATTERS IN BRIEF

As AIs that rely on neural networks become more integrated into our global economy and the digital fabric of our world, people are concerned that no one can explain how they do what they do, but Nvidia thinks it might have an answer…

A Deep Learning system’s ability to teach itself new tricks and skills is a strength because the machine gets better with experience, but it’s also a weakness because it doesn’t have any code that an engineer can tune, tweak – or debug. In every sense of the word it’s a black box, and while there are companies like Google trying to develop AI kill switches, the fact remains that as long as these systems remain black boxes no one can guarantee they’ll be able to turn them off if there’s a problem.

That’s one of the reasons why, for example, the creators of Google DeepMind’s AlphaGo couldn’t explain how it played the game of Go, let alone how it ended up mastering the game and beating the world champion, and why experts at Elon Musk’s OpenAI outfit couldn’t explain why, or how, their AI just “spontaneously evolved” to teach itself new things.

In some cases the black box nature of these AIs is fine, but as they get plugged into more and more of our world’s digital fabric, to do everything from running the stock market to administering critical care in hospitals, being able to get them to explain their “thinking” is becoming increasingly critical, not just to their creators but also to the regulators who, let’s face it, hate anything that sounds like “black box.”

Meanwhile, MIT have kick-started several projects to get these advanced AIs to explain their thinking, and it now turns out that Nvidia are doing the same with their self-driving car AI, and they say they’ve found a simpler way of instilling transparency.

“While the technology lets us build systems that learn to do things we can’t manually program, we can still explain how the systems make decisions,” said Danny Shapiro, Nvidia’s Head of Automotive.

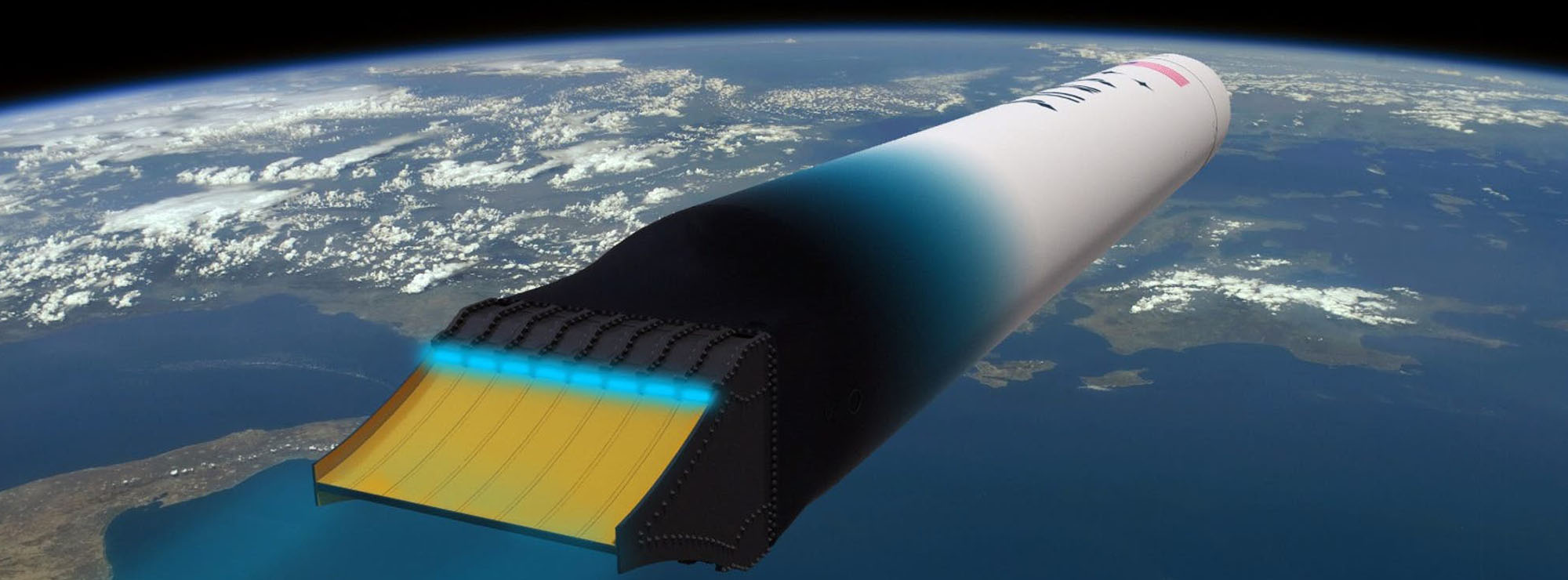

It turns out that because the AI’s processing takes place right inside the layers of processing arrays that make up a neural network, the results can be displayed in real time as a “visualization mask” that’s superimposed on the image coming straight from, in this case, the car’s forward-looking camera. So far, the tests Nvidia have run involve the machine turning the steering wheel to keep the car within its lane.

Nvidia’s new method works by taking the analytical output from a high layer in the network – one that has already extracted important features from the image fed in by a camera. It then superimposes that output onto lower layers, averages it, then superimposes it on still lower layers until getting all the way to the original camera image.
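To make that layer-by-layer process a little more concrete, here is a minimal sketch in plain Python. It is not Nvidia’s code, and the description above doesn’t spell out the exact operations, so the sketch makes some assumptions: each layer’s channels are averaged into one map, “superimposing” is treated as a pixel-by-pixel multiplication, and the smaller map is enlarged with simple nearest-neighbour upscaling. The function names and the tiny example data are made up for illustration.

```python
def channel_average(feature_maps):
    """Average a layer's channels (same-sized 2D lists) into a single 2D map."""
    n = len(feature_maps)
    rows, cols = len(feature_maps[0]), len(feature_maps[0][0])
    return [[sum(fm[r][c] for fm in feature_maps) / n for c in range(cols)]
            for r in range(rows)]


def upscale_nearest(grid, rows, cols):
    """Resize a 2D map to (rows, cols) using nearest-neighbour sampling."""
    src_rows, src_cols = len(grid), len(grid[0])
    return [[grid[r * src_rows // rows][c * src_cols // cols]
             for c in range(cols)]
            for r in range(rows)]


def visualization_mask(layers):
    """Build a mask from layers ordered shallow (near the image) to deep.

    Assumption: "superimpose" is modelled as pointwise multiplication.
    """
    averaged = [channel_average(layer) for layer in layers]
    mask = averaged[-1]                       # start at the highest layer
    for lower in reversed(averaged[:-1]):     # walk back toward the image
        rows, cols = len(lower), len(lower[0])
        enlarged = upscale_nearest(mask, rows, cols)
        mask = [[enlarged[r][c] * lower[r][c] for c in range(cols)]
                for r in range(rows)]
    # Normalise to the range 0..1 so the mask can be drawn over the image.
    lowest = min(min(row) for row in mask)
    highest = max(max(row) for row in mask)
    span = (highest - lowest) or 1.0
    return [[(value - lowest) / span for value in row] for row in mask]


# Hypothetical activations: a 4x4 shallow layer with two channels and a
# 2x2 deep layer with one channel that only "cares" about the top right.
shallow = [
    [[0, 0, 2, 2], [0, 0, 2, 2], [1, 1, 0, 0], [1, 1, 0, 0]],
    [[0, 0, 2, 2], [0, 0, 0, 0], [1, 1, 0, 0], [1, 1, 0, 0]],
]
deep = [[[0, 1], [0, 0]]]

mask = visualization_mask([shallow, deep])
for row in mask:
    print(row)
```

In this toy example the bottom-left activations in the shallow layer are wiped out of the mask because the deep layer gave that region no weight, which is the point of working downwards from the high layer: only detail that the later layers actually used survives.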

The result is a camera image on which the AI’s opinion of what’s significant is highlighted. And, in fact, those parts turn out to be just what a human driver would consider significant, such as lane markings, road edges, parked vehicles, hedges alongside the route, and so on. But, just to make sure that these features really were key to their AI’s decision making, the researchers classified all the pixels into two classes – Class 1, which contains “salient” features that clearly have to do with driving decisions, and Class 2, which contains “non-salient” features that are typically in the background. The researchers then manipulated the two classes digitally and found that only salient features mattered.

“Shifting the salient objects results in a linear change in steering angle that is nearly as large as that which occurs when we shift the entire image,” said Shapiro, “shifting just the background pixels has a much smaller effect on the steering angle.”
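The shape of that test can be sketched in a few lines of plain Python. Everything here is a stand-in: `toy_steering` is a hypothetical substitute for the real network that simply steers towards the average column of very bright pixels, the image and mask are invented, and the 0.5 threshold for “salient” is an assumption. Because the toy model ignores dim pixels by construction, the background shift has exactly zero effect here, whereas Nvidia reports a “much smaller” effect, so read this as an illustration of the procedure rather than a reproduction of the result.

```python
def split_by_saliency(mask, threshold=0.5):
    """Return a grid of booleans: True where the mask marks a pixel salient."""
    return [[value >= threshold for value in row] for row in mask]


def shift_classes(image, salient, dx_salient=0, dx_background=0, fill=0.0):
    """Shift each pixel class horizontally by its own offset (in columns)."""
    rows, cols = len(image), len(image[0])
    out = [[fill] * cols for _ in range(rows)]
    # Paint the background first so salient pixels always end up on top.
    for is_salient, dx in ((False, dx_background), (True, dx_salient)):
        for r in range(rows):
            for c in range(cols):
                if salient[r][c] == is_salient and 0 <= c + dx < cols:
                    out[r][c + dx] = image[r][c]
    return out


def toy_steering(image, bright=0.9):
    """Hypothetical model: offset of the bright pixels from the image centre."""
    cols = len(image[0])
    hits = [c for row in image for c, px in enumerate(row) if px >= bright]
    if not hits:
        return 0.0
    return sum(hits) / len(hits) - (cols - 1) / 2


# Invented data: a bright lane marking in column 2 over a dim background.
image = [[0.3, 0.2, 1.0, 0.3, 0.2, 0.3, 0.2] for _ in range(3)]
mask = [[0.1, 0.1, 1.0, 0.1, 0.1, 0.1, 0.1] for _ in range(3)]
salient = split_by_saliency(mask)

base = toy_steering(image)
delta_salient = toy_steering(shift_classes(image, salient, dx_salient=1)) - base
delta_background = toy_steering(shift_classes(image, salient, dx_background=1)) - base
delta_whole = toy_steering(shift_classes(image, salient, 1, 1)) - base

print("salient only:", delta_salient)
print("background only:", delta_background)
print("whole image:", delta_whole)
```

With a real network you would swap `toy_steering` for the model’s predicted steering angle and compare the three deltas across a range of shifts.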

So while it’s true that engineers can’t reach into the system to fix a bug as and when they find one, because deep neural nets don’t have code, we may at least have found a new way to get them to explain, in part at least, their thinking. It’s just a shame we can’t use this same technique on people… dang it.

Yes, that sounds like we may have an audit trail, and perpetual auditing, of the neural changes that you don’t have code for. However insignificant the unused data is, discarding it as non-influential may in fact be short-sighted, as the AI’s determination of what is not needed to make decisions is, or should be, perpetually learned as well. It defines the environment in which the AI exists at the time, and it too is evolving.

So, never too much data when you are trying to emulate the brain…