WHY THIS MATTERS IN BRIEF

You might think that seeing around corners is impossible, but if you can capture photons and apply a bit of techno-magic, it becomes possible.

Interested in the Exponential Future? Connect, download a free E-Book, watch a keynote, or browse my blog.

Your eyes are sensitive enough to detect individual photons, and a while ago researchers in both China and the US developed new, highly sensitive photon detectors that literally let them take photos and see around corners.

Now, building on that work, researchers have demonstrated a new 3D depth-sensing camera that can detect single photons of light at megapixel resolution and 24,000 frames per second, both world records for the technology. Its capabilities could help build the next generation of vision systems for autonomous vehicles, letting them see everything around them, whether it’s in plain sight or hidden around the next corner.

Designed by researchers at EPFL in Switzerland together with Canon, the MegaX camera is “the culmination of over 15 years of research on single photon avalanche diodes (SPADs), which are photodetectors used in next-generation optical sensor technology,” according to EPFL professor Edoardo Charbon, lead researcher on the project.

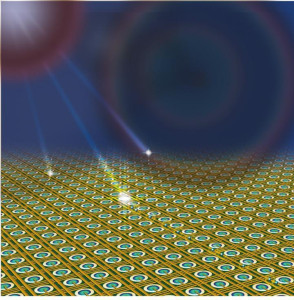

The camera in question

SPAD sensors can detect the smallest possible quantity of light, a single photon, thanks to an avalanche effect that occurs when electrons are accelerated to high speeds by high voltages. An electron with enough kinetic energy can knock nearby electrons free as well, so a single photon that releases a single electron can snowball exponentially into a readable signal.
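To make the “snowball” concrete, here is a deliberately simplified Python toy model, not a physical simulation of a real SPAD. It assumes that in each round every free electron knocks loose one more electron with some probability (the hypothetical `ionization_prob`), so the expected electron count grows geometrically. In a real SPAD the avalanche keeps growing until the circuit quenches it.

```python
def expected_avalanche_size(generations: int, ionization_prob: float = 1.0) -> float:
    """Expected number of free electrons after `generations` rounds of impact
    ionization, starting from the single electron released by one photon.

    Toy assumption: in each round every free electron frees one additional
    electron with probability `ionization_prob`.
    """
    if generations < 0:
        raise ValueError("generations must be non-negative")
    if not 0.0 <= ionization_prob <= 1.0:
        raise ValueError("ionization_prob must be between 0 and 1")
    electrons = 1.0  # one photon releases one electron
    for _ in range(generations):
        # each electron stays free and, on average, adds `ionization_prob` new ones
        electrons *= 1.0 + ionization_prob
    return electrons


if __name__ == "__main__":
    for rounds in (1, 10, 20):
        print(rounds, expected_avalanche_size(rounds))
```

If every electron doubles each round, 20 rounds turn one photoelectron into about a million (2^20 = 1,048,576), which is why a single photon can end up as a signal the electronics can read.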

These are the sensors that power LiDAR, PET scanners and Time of Flight (ToF) sensors, which bounce lasers off a target and measure how long the light’s round trip takes in order to build 3D maps of objects in space. Samsung, for example, is using this capability to help people automatically turn their home videos into Virtual Reality (VR) worlds.
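The time-of-flight principle boils down to one formula: distance equals the speed of light multiplied by the round-trip time, divided by two, because the light travels out to the target and back again. The Python sketch below applies it pixel by pixel to turn a grid of measured round-trip times into a depth map; the function names and sample timings are illustrative and not taken from any real sensor’s API.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in vacuum


def distance_from_round_trip(round_trip_s: float) -> float:
    """Distance to the target in metres, given the light's round-trip time in seconds."""
    # The pulse travels to the target and back, so halve the total path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def depth_map(round_trip_times_s: list[list[float]]) -> list[list[float]]:
    """Convert a 2D grid of per-pixel round-trip times into a grid of depths in metres."""
    return [[distance_from_round_trip(t) for t in row] for row in round_trip_times_s]


if __name__ == "__main__":
    # Hypothetical 2x2 grid of round-trip times (10-40 nanoseconds, in seconds)
    times = [[10e-9, 20e-9], [30e-9, 40e-9]]
    for row in depth_map(times):
        print([round(d, 2) for d in row])
```

A 20 ns round trip, for example, corresponds to a target roughly 3 m away.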

Until now these have been low-resolution sensors, either a single pixel or arrays of up to 1,000 pixels, which means larger objects have had to be scanned horizontally, vertically or both. This creates a trade-off: do you want accurate, high-precision scanning or fast frame rates?
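Here is a back-of-the-envelope Python sketch of that trade-off, under simplifying assumptions: the small array is stepped across the scene one tile at a time, each position takes one exposure, and moving between positions costs no extra time. The exposure rate in the example is a hypothetical figure chosen for illustration.

```python
import math


def full_scene_frame_rate(scene_pixels: int, sensor_pixels: int, exposures_per_s: float) -> float:
    """Full-scene frames per second when a small sensor must be scanned across the scene.

    Assumes one exposure per scan position and no time lost moving between positions.
    """
    scan_positions = math.ceil(scene_pixels / sensor_pixels)
    return exposures_per_s / scan_positions


if __name__ == "__main__":
    # Hypothetical: a 1,000-pixel array covering a 1-megapixel scene
    # at 24,000 exposures per second needs 1,000 positions per scene.
    print(full_scene_frame_rate(1_000_000, 1_000, 24_000))  # 24.0
    # A sensor that already has a million pixels needs no scanning at all.
    print(full_scene_frame_rate(1_000_000, 1_000_000, 24_000))  # 24000.0
```

Under these assumptions, a thousandfold gap between scene and sensor resolution costs a thousandfold in full-scene frame rate, which is exactly the compromise a megapixel sensor avoids.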

The new MegaX camera offers a huge advance on both fronts, with million-pixel resolution and a sensor so fast it can smash out 24,000 frames per second, representing a data rate of 25 Gigabytes per second. It’s incredibly sensitive, capable of detecting single photons, and offers “unprecedented” dynamic range (the range of brightness between the darkest and brightest parts of an image). Its shutter can also open for as little as 3.8 nanoseconds, just under four billionths of a second.
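A raw data rate is simply pixel count multiplied by frame rate and by the number of bits each pixel reports per frame. The article doesn’t say how many bits each MegaX pixel outputs, so `bits_per_pixel` below is an assumption. At 8 bits per pixel, a million pixels at 24,000 frames per second comes to 24 GB/s, close to the quoted figure, while a 1-bit readout (photon or no photon) would need an eighth of that.

```python
def data_rate_bytes_per_s(pixels: int, frames_per_s: float, bits_per_pixel: int) -> float:
    """Raw sensor output in bytes per second (no compression or protocol overhead)."""
    bits_per_s = pixels * frames_per_s * bits_per_pixel
    return bits_per_s / 8  # 8 bits per byte


if __name__ == "__main__":
    pixels = 1_000_000   # "million-pixel resolution"
    fps = 24_000         # frame rate quoted for MegaX
    for bits in (1, 8):  # bit depth is an assumption, not stated in the article
        gb_per_s = data_rate_bytes_per_s(pixels, fps, bits) / 1e9
        print(f"{bits}-bit pixels: {gb_per_s:.1f} GB/s")
```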

The researchers also demonstrated that the MegaX can do things other sensors can’t, such as obtaining good depth readings of objects through partially transparent glass.

It works its magic using extremely small SPAD pixels designed to “quench” the avalanche signal almost immediately after it forms, resetting the sensor for the next frame. This also gives it another highly desirable attribute, very low power consumption, which will come in very handy when the technology matures to the point where it can run in smartphones and automotive LiDAR systems. It could also play a part in future quantum communication, sensing and computing systems.
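Why does fast quenching matter? While a pixel is recovering from an avalanche it can’t register another photon. One common textbook way to describe this is the non-paralyzable dead-time model, in which the measured count rate equals the true rate divided by (1 + true rate × dead time). This is a generic detector model rather than anything specific to MegaX, and the article gives no dead-time figures, so the numbers below are hypothetical and only illustrate the trend: the shorter the recovery, the fewer photons are missed.

```python
def measured_count_rate(true_rate_per_s: float, dead_time_s: float) -> float:
    """Counts per second registered by a detector with non-paralyzable dead time.

    After each detection the pixel is blind for `dead_time_s`; photons arriving
    during that window are lost and do not extend it.
    """
    if true_rate_per_s < 0 or dead_time_s < 0:
        raise ValueError("rates and dead times must be non-negative")
    return true_rate_per_s / (1.0 + true_rate_per_s * dead_time_s)


if __name__ == "__main__":
    true_rate = 10e6  # hypothetical: 10 million photons per second reaching one pixel
    for dead_time_ns in (100, 10, 1):  # hypothetical recovery times
        measured = measured_count_rate(true_rate, dead_time_ns * 1e-9)
        print(f"{dead_time_ns:>3} ns dead time: {measured / true_rate:.0%} of photons counted")
```

With these made-up figures, cutting the recovery time from 100 ns to 1 ns raises the fraction of photons counted from about half to nearly all of them.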

It’s not there yet, though. The individual pixels are still too big: at 9 µm, they’re about 10 times larger than a regular camera pixel. But Charbon and the team are already working on a MegaX successor that shrinks them to around 2.2 µm.

The research was published in Optica.

Sources: EPFL, EurekAlert