WHY THIS MATTERS IN BRIEF

There are increasingly few areas of work and society that technology isn’t influencing, and even stuntmen aren’t immune.

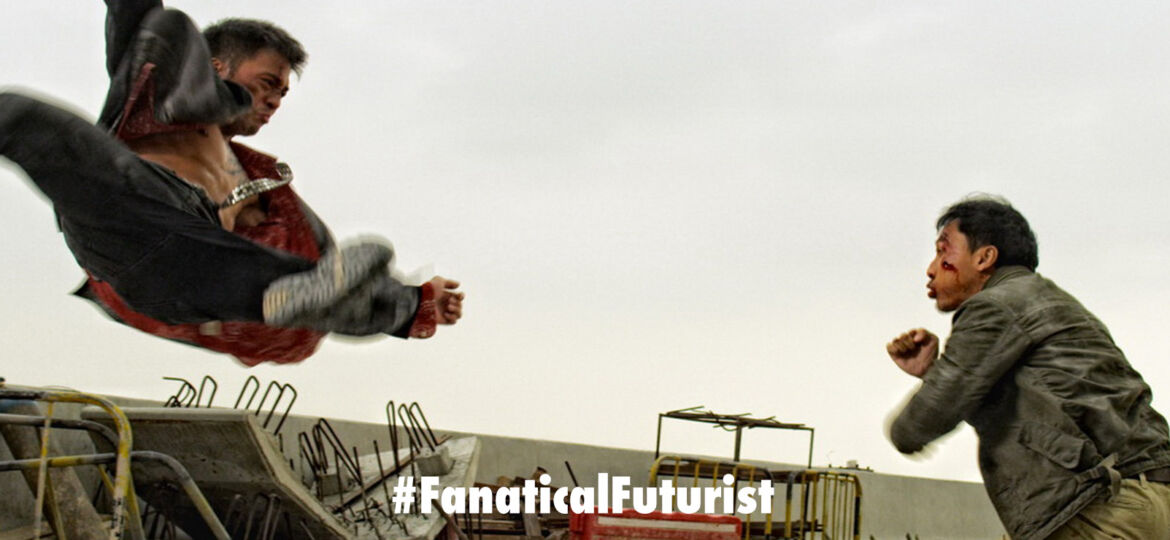

A new artificial intelligence (AI) system has been used to create computer-animated stuntmen that could make action movies cooler than before. Researchers at the University of California, Berkeley have developed a system capable of recreating some of the slickest moves in martial arts. Combined with other technologies, such as those from the deepfake stable, including high-definition rendering and lifelike voices, it could potentially replace real-life human actors. UC Berkeley graduate student Xue Bin ‘Jason’ Peng, who was involved in the project, says the technology “results in movements that are tough to separate from those of humans.”

“This is actually a pretty big leap from what has been done with deep learning and animation so far,” Peng said in a statement released with his research, which was presented at the 2018 SIGGRAPH conference in Vancouver, Canada.

“In the past, a lot of work has gone into simulating natural [human] motions, but these physics based methods tend to be very specialised, they’re not general methods that can handle a large variety of skills. If you compare our results to motion capture recorded from humans, we are getting to the point where it is pretty difficult to distinguish the two, to tell what is simulation and what is real. We’re moving toward a virtual stuntman,” he said.

A paper on the project, dubbed DeepMimic, was published in the journal ACM Transactions on Graphics, and the team recently made its code and motion capture data available on GitHub for others to try.

The team used deep reinforcement learning techniques to teach the system how to move. It took motion capture data from real-life performances, fed it into the system and set it to practise the moves in a simulation for the equivalent of a whole month, training 24 hours a day. DeepMimic learned 25 different moves, such as kicks and backflips, comparing its results each time to see how close it came to the original mocap data.

Unlike other systems that may have tried and failed repeatedly, DeepMimic broke each move down into steps, so if it failed at one point it could analyse its performance and adjust at exactly that moment.
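To make the idea of scoring an attempt against the mocap data more concrete, here is a minimal sketch in Python. It is not the team's code: the function names, the `scale` value and the tiny two-joint clips are all assumptions made for illustration, and the exponential-of-squared-error score is just one common way to turn "how close was the pose" into a reward. It scores every step of a move separately, which shows how a system could pinpoint the step where an attempt went wrong.

```python
import math


def pose_reward(simulated, reference, scale=2.0):
    """Score one pose against its mocap reference.

    Returns a value in (0, 1]; 1.0 means the joint angles match exactly.
    The `scale` factor is an illustrative assumption, not a published value.
    """
    if len(simulated) != len(reference):
        raise ValueError("poses must have the same number of joint angles")
    squared_error = sum((s - r) ** 2 for s, r in zip(simulated, reference))
    return math.exp(-scale * squared_error)


def per_step_rewards(simulated_clip, reference_clip):
    """Score each step of a move separately."""
    return [pose_reward(s, r) for s, r in zip(simulated_clip, reference_clip)]


# Hypothetical data: three steps of a move, two joint angles (radians) each.
reference_clip = [[0.0, 0.0], [0.5, 0.2], [1.0, 0.4]]
simulated_clip = [[0.0, 0.0], [0.5, 0.2], [0.4, 0.9]]

rewards = per_step_rewards(simulated_clip, reference_clip)

# The lowest-scoring step is where the attempt drifted furthest from the mocap.
worst_step = min(range(len(rewards)), key=rewards.__getitem__)
print(rewards, worst_step)
```

In a real reinforcement learning setup, per-step scores like these would be fed back to the learning algorithm as rewards rather than simply printed.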

“As these techniques progress, I think they will start to play a larger and larger role in movies,” said Peng. “However, since movies are generally not interactive, these simulation techniques might have more immediate impact on games and VR.”

“In fact, simulated characters trained using [reinforcement learning] are already finding their way to games. Indie games could be a very nice testing ground for these ideas. But it might take a while longer before they are ready for AAA titles, since working with simulated characters does require a pretty drastic shift from traditional development pipelines,” he added.

Game developers are already starting to experiment with these tools. One developer managed to use DeepMimic inside the Unity game engine…

Ladies and Gentlemen, we have completed the Backflip! Congrats to Ringo, aka StyleTransfer002.144 – using #unity3d + #MLAgents & #MarathonEnvs. StyleTransfer trains an #ActiveRagoll from MoCap data aka Deepmimic https://t.co/gAGtYYeawE … #madewithunity pic.twitter.com/3iIoDsfdLe

— Joe Booth (@iAmVidyaGamer) November 1, 2018

Peng is hopeful that releasing the code will speed up its adoption. He also notes that the team has “been speaking to a number of game developers and animation studios about possible applications of this work, though I can’t go into too much detail about that yet.”

Source: UC Berkeley