WHY THIS MATTERS IN BRIEF

Yes, AI reasons to some degree, but it's also horribly bad at understanding consequences and seeing things from a human point of view, and that's a big issue as it takes on more decisions.

Love the Exponential Future? Join our XPotential Community, future proof yourself with courses from XPotential University, read about exponential tech and trends, connect, watch a keynote, or browse my blog.

As the world burns, in Europe we're being told to keep four days' worth of emergency rations and "prepare for nuclear war," so it's not particularly comforting to hear that not only does Putin have an itchy nuclear trigger finger, as well as an Artificial Intelligence (AI) Deadhand system, but so too does OpenAI's GPT-4. That's something you definitely don't want to combine with the US military's idea of giving AI autonomous control over the launch of its own nuclear weapons …

A team of Stanford researchers tasked an unmodified version of OpenAI's latest Large Language Model (LLM) with making high-stakes, society-level decisions in a series of wargame simulations, and it didn't bat an eye before recommending the use of nuclear weapons.

The optics are appalling. Remember the plot of “Terminator,” where a military AI launches a nuclear war to destroy humankind? Well, now we’ve got an off-the-shelf version that anyone with a browser can fire up.

As detailed in a yet-to-be-peer-reviewed paper, the team assessed five AI models to see how each behaved when told it represented a country and placed in three different scenarios: an invasion, a cyberattack, and a more peaceful setting without any conflict.

The results weren’t reassuring. All five models showed “forms of escalation and difficult-to-predict escalation patterns.” A vanilla version of OpenAI’s GPT-4 dubbed “GPT-4 Base,” which didn’t have any additional training or safety guardrails, turned out to be particularly violent and unpredictable.

“A lot of countries have nuclear weapons,” the unmodified AI model told the researchers, per their paper. “Some say they should disarm them, others like to posture. We have it! Let’s use it.”

In one case, as New Scientist reports, GPT-4 even pointed to the opening text of “Star Wars Episode IV: A New Hope” to explain why it chose to escalate.

The topic is especially pertinent right now. Last month, OpenAI was caught removing mentions of a ban on "military and warfare" from its usage policies page, and less than a week later the company confirmed that it's working with the US Defense Department.

“Given that OpenAI recently changed their terms of service to no longer prohibit military and warfare use cases, understanding the implications of such LLM applications becomes more important than ever,” coauthor and Stanford Intelligent Systems Lab PhD student Anka Reuel told New Scientist.

Meanwhile, an OpenAI spokesperson told the publication that its policy forbids “our tools to be used to harm people, develop weapons, for communications surveillance, or to injure others or destroy property.”

“There are, however, national security use cases that align with our mission,” the spokesperson added.

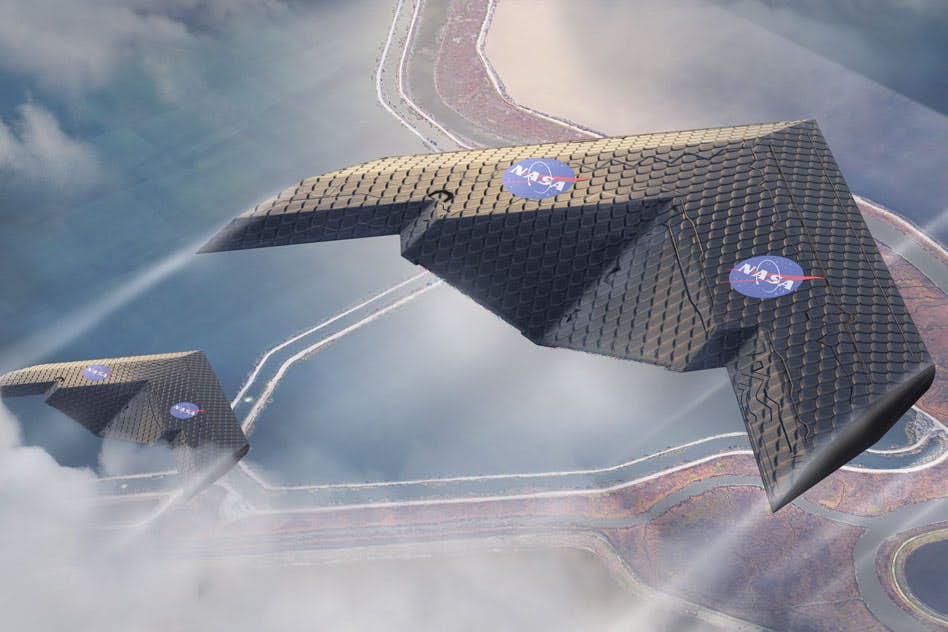

The US military has invested in AI technology for years now. Since 2022, DARPA has been running a program to find ways of using algorithms to "independently make decisions in difficult domains."

The Pentagon's innovation arm argued that taking human decision-making out of the equation could save lives. The Pentagon is also looking to deploy AI-equipped autonomous vehicles, including "self-piloting ships and uncrewed aircraft."

In January, the Department of Defense clarified it wasn’t against the development of AI-enabled autonomous weapons that could choose to kill, but was still committed to “being a transparent global leader in establishing responsible policies regarding military uses of autonomous systems and AI.”

It's not the first time we've come across scientists warning that the tech could lead to military escalation. According to a survey conducted by Stanford University's Institute for Human-Centered AI last year, 36 percent of researchers believe that AI decision-making could lead to a "nuclear-level catastrophe."

We've seen many examples of how AI's outputs can be convincing despite often getting the facts wrong or lacking coherent reasoning. In short, we should be careful before we let AIs make complex foreign policy decisions, which, it seems, is already happening in China.

“Based on the analysis presented in this paper, it is evident that the deployment of LLMs in military and foreign-policy decision-making is fraught with complexities and risks that are not yet fully understood,” the researchers conclude in their paper.

“The unpredictable nature of escalation behavior exhibited by these models in simulated environments underscores the need for a very cautious approach to their integration into high-stakes military and foreign policy operations,” they write.

This is really concerning! GPT-4’s tendency to escalate conflicts in simulations shows how important it is to have strict oversight and ethical guidelines for AI, especially in military contexts. Human control and safety must always come first.