WHY THIS MATTERS IN BRIEF

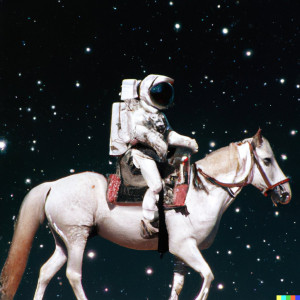

If you told a human artist to create a picture of an astronaut riding a horse, they’d be able to do it, but until now that kind of context has been very hard for AI to grasp.

Love the Exponential Future? Join our XPotential Community, future proof yourself with courses from XPotential University, read about exponential tech and trends, connect, watch a keynote, or browse my blog.

Jumping straight in: while Artificial Intelligence (AI) is great at dreaming, fighting, imagining, multi-tasking, and even creating and spawning new AIs, it’s rubbish at understanding the world, and has even been beaten at it by toddlers. Well, that used to be the case anyway. Now, after a boost, it’s just gotten a lot better.

When OpenAI revealed its picture-making neural network DALL-E in early 2021, the program’s human-like ability to combine different concepts in new ways was striking. The synthetic images that DALL-E produced on demand were surreal and cartoonish, but they showed that the AI had learned key lessons about how the world fits together. DALL-E’s avocado armchairs had the essential features of both avocados and chairs; its dog-walking daikons in tutus wore the tutus around their waists and held the dogs’ leashes in their hands.

The Future of Synthetic Media and Deepfakes, by Keynote Speaker Matthew Griffin

Last week the San Francisco-based lab announced DALL-E’s successor, DALL-E 2. It produces much better images, is easier to use, and – unlike the original version – will be released to the public. DALL-E 2 may even stretch current definitions of AI, forcing us to examine that concept and decide what it really means.

“The leap from DALL-E to DALL-E 2 is reminiscent of the leap from GPT-2 to GPT-3,” says Oren Etzioni, CEO at the Allen Institute for Artificial Intelligence (AI2) in Seattle. GPT-3, a neural network with billions of parameters, was also developed by OpenAI.

Some of the images it produced

Image-generation models like DALL-E have come a long way in just a few years. In 2020, AI2 showed off a neural network that could generate images from prompts such as “Three people play video games on a couch.” The results were distorted and blurry, but just about recognizable. Last year, Chinese tech giant Baidu improved on the original DALL-E’s image quality with a model called ERNIE-ViLG.

DALL-E 2 takes the approach even further. Its creations can be stunning: ask it to generate images of astronauts on horses, teddy-bear scientists, or sea otters in the style of Vermeer, and it does so with near photorealism. The examples that OpenAI has made available, as well as those people saw in a demo the company gave last week, will have been cherry-picked. Even so, the quality is often remarkable.

“One way you can think about this neural network is transcendent beauty as a service,” says Ilya Sutskever, cofounder and chief scientist at OpenAI. “Every now and then it generates something that just makes me gasp.”

DALL-E 2’s better performance is down to a complete redesign. The original version was more or less an extension of GPT-3. In many ways, GPT-3 is like a supercharged autocomplete: start it off with a few words or sentences and it carries on by itself, predicting the next several hundred words in the sequence. DALL-E worked in much the same way, but swapped words for pixels. When it received a text prompt, it “completed” that text by predicting the string of pixels that it guessed was most likely to come next, producing an image.
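The "supercharged autocomplete" idea described above can be sketched in a few lines of Python. This is only a toy illustration, not OpenAI's code: `toy_next_token_probs` is a hypothetical stand-in for a trained network, and the small integers stand in for both the words of the prompt and the pixel values that follow it.

```python
import random

def toy_next_token_probs(context, vocab_size):
    """Hypothetical stand-in for a trained model.

    It simply favours the value that follows the last one in the context;
    a real model would learn these probabilities from data.
    """
    last = context[-1]
    weights = [1.0] * vocab_size
    weights[(last + 1) % vocab_size] = 10.0
    total = sum(weights)
    return [w / total for w in weights]

def complete(prompt_tokens, n_new, vocab_size=8, seed=0):
    """Extend the prompt one token at a time, sampling each from the model."""
    rng = random.Random(seed)
    sequence = list(prompt_tokens)
    for _ in range(n_new):
        probs = toy_next_token_probs(sequence, vocab_size)
        next_token = rng.choices(range(vocab_size), weights=probs, k=1)[0]
        sequence.append(next_token)
    return sequence

# Made-up token ids standing in for a text prompt.
text_prompt = [3, 1, 4]
# Everything generated after the prompt is treated as the "image".
image_tokens = complete(text_prompt, n_new=16)[len(text_prompt):]
print(image_tokens)
```

The point of the sketch is the loop: each new value is chosen by looking at everything that came before it, which is why generation in this style proceeds one step at a time.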

DALL-E 2 is not based on GPT-3. Under the hood, it works in two stages. First, it uses OpenAI’s CLIP model, which can pair written descriptions with images, to translate the text prompt into an intermediate form that captures the key characteristics that an image should have to match that prompt (according to CLIP). Second, DALL-E 2 runs a type of neural network known as a diffusion model to generate an image that satisfies CLIP.
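To give a feel for how a model might "pair written descriptions with images", here is a minimal sketch that scores a text prompt against a few candidate images using cosine similarity between embedding vectors. The three-number embeddings and the image names are made up for illustration; a real system would get them from trained text and image encoders.

```python
import math

def cosine(a, b):
    """Cosine similarity: 1.0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embedding for the prompt "an astronaut riding a horse".
text_embedding = [0.9, 0.1, 0.8]

# Hypothetical embeddings for three candidate images.
image_embeddings = {
    "astronaut_on_horse": [0.8, 0.2, 0.9],
    "avocado_armchair": [0.1, 0.9, 0.2],
    "horse_in_field": [0.1, 0.1, 0.9],
}

# The best match is the image whose embedding is closest to the text's.
best = max(
    image_embeddings,
    key=lambda name: cosine(text_embedding, image_embeddings[name]),
)
print(best)
```

In this toy setup the horse-only image scores moderately well and the armchair scores poorly, which is the kind of graded signal a generator could use to judge whether an image "satisfies" the prompt.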

Diffusion models are trained on images that have been completely distorted with random pixels. They learn to convert these images back into their original form. In DALL-E 2, there are no existing images. So the diffusion model takes the random pixels and, guided by CLIP, converts them into a brand new image, created from scratch, that matches the text prompt.
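The two halves of that description, distorting an image with noise and then working back from pure noise, can be sketched as a toy. Everything here is an assumption made for illustration: `toy_denoiser` is a hypothetical stand-in for the trained network and its CLIP guidance (it simply "predicts" a fixed target), and the simple blending loop is not the sampler DALL-E 2 actually uses.

```python
import math
import random

def add_noise(image, keep, rng):
    """Training-side corruption: blend pixels with Gaussian noise.

    keep=1.0 leaves the image intact; keep=0.0 replaces it with pure noise.
    """
    noise = [rng.gauss(0.0, 1.0) for _ in image]
    return [
        math.sqrt(keep) * pixel + math.sqrt(1.0 - keep) * n
        for pixel, n in zip(image, noise)
    ]

def toy_denoiser(noisy, guide):
    """Hypothetical stand-in for a trained, prompt-guided network.

    A real model would predict the clean image from the noisy one and the
    prompt; this toy just returns the guide itself.
    """
    return list(guide)

def generate(guide, steps=10, seed=0):
    """Start from random pixels and move step by step toward the prediction."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in guide]  # pure noise, no existing image
    for step in range(steps):
        predicted_clean = toy_denoiser(x, guide)
        blend = 1.0 / (steps - step)  # small early steps, full step at the end
        x = [(1.0 - blend) * xi + blend * pi for xi, pi in zip(x, predicted_clean)]
    return x

target = [0.1, 0.9, 0.4, 0.7]  # made-up "pixels" the guidance prefers
print(generate(target))
```

The key idea the sketch preserves is that generation begins with nothing but noise and is refined gradually, with the guidance deciding what the noise should turn into.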

The diffusion model allows DALL-E 2 to produce higher-resolution images more quickly than DALL-E. “That makes it vastly more practical and enjoyable to use,” says Aditya Ramesh at OpenAI.

In the demo, Ramesh and his colleagues showed people pictures of a hedgehog using a calculator, a corgi and a panda playing chess, and a cat dressed as Napoleon holding a piece of cheese.

“It’s easy to burn through a whole work day thinking up prompts,” he says.

DALL-E 2 still slips up. For example, it can struggle with a prompt that asks it to combine two or more objects with two or more attributes, such as “A red cube on top of a blue cube.” OpenAI thinks this is because CLIP does not always connect attributes to objects correctly.

As well as riffing off text prompts, DALL-E 2 can spin out variations of existing images. Ramesh plugs in a photo he took of some street art outside his apartment. The AI immediately starts generating alternate versions of the scene with different art on the wall. Each of these new images can be used to kick off its own sequence of variations.

“This feedback loop could be really useful for designers,” says Ramesh.

One early user, an artist called Holly Herndon, says she is using DALL-E 2 to create wall-sized compositions.

“I can stitch together giant artworks piece by piece, like a patchwork tapestry, or narrative journey,” she says. “It feels like working in a new medium.”

DALL-E 2 looks much more like a polished product than the previous version. That wasn’t the aim, says Ramesh. But OpenAI does plan to release DALL-E 2 to the public after an initial rollout to a small group of trusted users, much like it did with GPT-3. (You can sign up for access here.)

GPT-3 can produce toxic text. But OpenAI says it has used the feedback it got from users of GPT-3 to train a safer version, called InstructGPT. The company hopes to follow a similar path with DALL-E 2, which will also be shaped by user feedback. OpenAI will encourage initial users to break the AI, tricking it into generating offensive or harmful images. As it works through these problems, OpenAI will begin to make DALL-E 2 available to a wider group of people.

OpenAI is also releasing a user policy for DALL-E, which forbids asking the AI to generate offensive images (no violence or pornography) or political images. To prevent deepfakes, users will not be allowed to ask DALL-E to generate images of real people.

As well as the user policy, OpenAI has removed certain types of image from DALL-E 2’s training data, including those showing graphic violence. OpenAI also says it will pay human moderators to review every image generated on its platform.

“Our main aim here is to just get a lot of feedback for the system before we start sharing it more broadly,” says Prafulla Dhariwal at OpenAI. “I hope eventually it will be available, so that developers can build apps on top of it.”

Multi-tasking AIs that can view the world and work with concepts across multiple modalities – like language and vision – are a step towards Artificial General Intelligence (AGI) and DALL-E 2 is one of the best examples yet.

But while Etzioni is impressed with the images that DALL-E 2 produces, he is cautious about what this means for the overall progress of AI.

“This kind of improvement isn’t bringing us any closer to AGI,” he says. “We already know that AI is remarkably capable at solving narrow tasks using deep learning. But it is still humans who formulate these tasks and give deep learning its marching orders.”

For Mark Riedl, an AI researcher at Georgia Tech in Atlanta, creativity is a good way to measure intelligence. Unlike the Turing test, which requires a machine to fool a human through conversation, Riedl’s Lovelace 2.0 test judges a machine’s intelligence according to how well it responds to requests to create something, such as “A penguin on Mars wearing a spacesuit walking a robot dog next to Santa Claus.”

DALL-E scores well on this test. But intelligence is a sliding scale. As we build better and better machines, our tests for intelligence need to adapt. Many chatbots are now very good at mimicking human conversation, passing the Turing test in a narrow sense. They are still mindless, however.

Yet ideas about what we mean by “create” and “understand” change too, says Riedl. “These terms are ill-defined and subject to debate.” A bee understands the significance of yellow because it acts on that information, for example. “If we define understanding as human understanding, then AI systems are very far off,” says Riedl.

“But I would also argue that these art-generation systems have some basic understanding that overlaps with human understanding,” he says. “They can put a tutu on a radish in the same place that a human would put one.”

Like the bee, DALL-E 2 acts on information, producing images that meet human expectations. AIs like DALL-E push us to think about these questions and what we mean by these terms.

OpenAI is clear about where it stands. “Our aim is to create general intelligence,” says Dhariwal. “Building models like DALL-E 2 that connect vision and language is a crucial step in our larger goal of teaching machines to perceive the world the way humans do, and eventually developing AGI.”
