WHY THIS MATTERS IN BRIEF

We are well on the path to creating a society shaped and guided by digital and genetic profiling, and that can be both a good and a bad thing.

Dangerous dystopian tech, or must-have pre-crime tech in an increasingly dangerous world? I’ll let you decide, but for my part I don’t think you’ll be able to make that judgement as easily as you’d think, and I wouldn’t be surprised if you quickly find yourself caught in a conundrum…

In a world where criminals and terrorists could lurk just around the corner, more and more companies are trying to come up with new and innovative ways to find them and alert the authorities to their whereabouts – before they can wreak their havoc. But to achieve that goal it seems inevitable that they all first have to cross some big lines.

Firstly, say goodbye to online and offline privacy, and let’s face it, in today’s world that’s a given. Secondly, and perhaps the more worrying of the two, governments and agencies increasingly need to put their faith and trust in new technologies that help them accurately profile an individual’s behaviour, character and, most importantly, intent. And that’s a very big line to cross, because once you start profiling people you start going down a very long, very dark rabbit hole.

That said, it’s a line that, if it’s not being crossed, is increasingly being stepped on time and time again – whether it’s with new emerging brain scanning technologies that can detect a person’s guilt and pull secrets straight out of their heads, new persistent surveillance systems, or new Artificial Intelligence (AI) algorithms that can flag you as a criminal just by looking at the contours of your face.

Now, hot on the heels of some of these developments, another company, Israel-based Faception, has come up with a method, apparently based on solid scientific facts, that can categorise people’s personalities and character based on face structure alone, in real time. It’s hoped that its development will improve the authorities’ ability to discover anonymous individuals who have malicious intent.

“We’ve developed a system that can, using complex algorithms and big data analysis, identify a human’s character based on his face structure with a high percent success rate,” explained the company’s CEO, Shai Gilboa, “the development is unique also in the sense that it can point out possible threats, based on a person’s character. In quite a simple manner – calculating the person’s location and his character enables to estimate with a high probability the existence of a threat.”

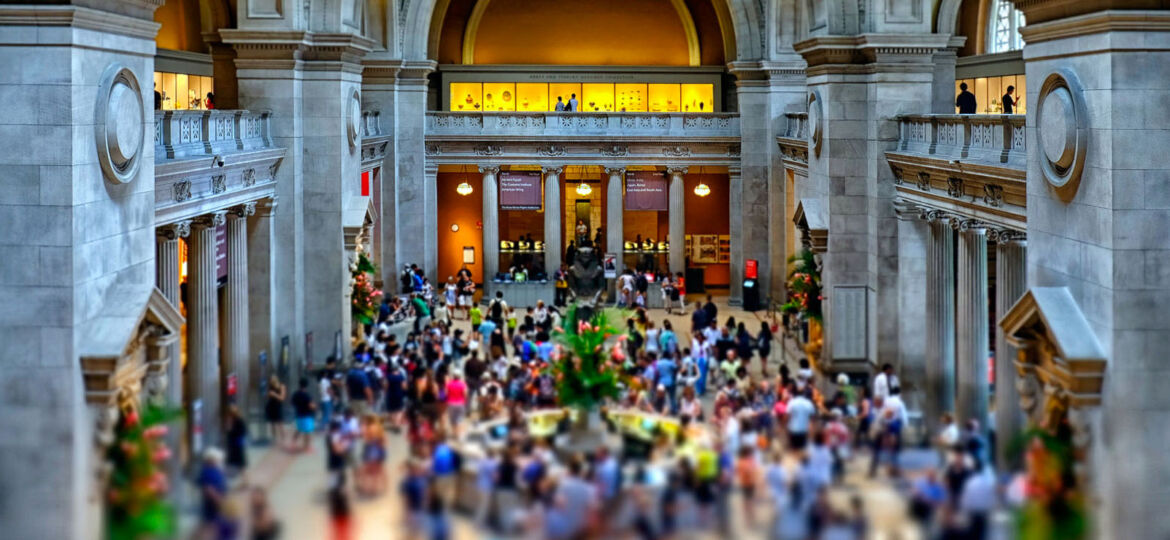

The system, which can be integrated into existing, ordinary security cameras, can be placed at the entrance to large events, border security posts at airports and police stations, where the cameras can analyse an individual’s face within milliseconds and pass the analysis on to the authorities, after which the data is erased in accordance with strong privacy and ethics guidelines.

When quizzed about the potential power that the new system could have Gilboa said: “First of all, we’re committed to regulation by countries we work with, besides the fact that we regulate ourselves. The analysis is carried out anonymously and objectively, we also never store data gathered on individuals. We work as service providers in the same way blood test service providers do. In addition, the personal information gathering market is overflowed by players such as Facebook or Google, so that there’s no financial sense in getting into that realm”.

The company also stresses that its domain is brand new and that the use cases for the technology are almost limitless.

“We’re focusing right now on public security while working with security agencies, we want to lead this personality analysis domain and be an example for companies that will develop technologies in this field in the future,” says Gilboa, “but other applications for the technology in the future [include] personal communication and better coordination between machines and humans. Once a robot understands from a human what his character is like, he can optimise the method in which to refer to him. Other applications include fintech, communication between a human driver and an autonomous one, spotting suitable employees in manpower companies and fitting relevant commercial content to customers.”

“It’s a very wide realm, if we continue improving and developing as we’ve done over the last years, this might be quite a revolution,” concludes Gilboa.

Over the course of the next ten years we will inevitably see more and more of these emerging technology fields and solutions converge, and at that point we could be well on our way to creating truly viable pre-crime solutions, or be in a dystopian world.

Taking this one step further, imagine having this technology, and others like it, used on you when you’re applying for a car loan, mortgage, or passport – do you think you would pass? And just what would you be “passing”? Increasingly your future will be determined by invisible, potentially unaccountable, black box algorithms.

By the way, have you decided yet?

This smacks of the failed Google Glass, which broke privacy laws, let alone ethics.

There is also the huge question of where all the images from self-driving car cameras go, e.g. Tesla’s, as these go back to their Cloud AI engine.

Facial personality profiling, correlated with facial recognition, online activity, and video/voice analysis, can indeed turn incredibly powerful and probably very accurate at some point. Ironically, the ‘character’ of the people/company in control of such capability will determine if its power will be channeled for good or not.

Technology or not, your character today is your destiny tomorrow. The major difference is time-to-destiny.

Seems interesting – but isn’t this crossing the privacy/discrimination line? What happens if this profiling occurs on a day when you’ve just received bad news, for example a death in the family?

Companies want to use AI for all sorts of things. I don’t want to be profiled by AI based on one photo, and hopefully GDPR will give me some measure of protection.

Interesting. Would love to see how I fare, since my wife is always telling me I have the male version of “resting bitch face”.

Thanks so much for highlighting this innovation. Will read more. This is an interesting idea that may be achievable in the near future. Fingerprints and other similar biometric features are still not foolproof. Voice tech has reached maturity, but image analysis is yet to reach that state. Google, Microsoft, IBM and others are in this sector to give more insights about individual images, but persona would be great.