WHY THIS MATTERS IN BRIEF

- Artificial intelligence workloads and learning need huge amounts of computing power, and chip manufacturers are warring to lead the new revolution.

There’s an artificial intelligence (AI) arms race going on. But I’m not talking about a software arms race – although that’s in full swing – I’m talking about an arms race to create the next generation of processors that will dominate the future of AI workloads.

Over the past year Intel has spent over $32 billion acquiring companies like Altera, Movidius, Nervana and, more recently, Mobileye. Nvidia has staked its future on deep learning with its latest Tesla GPUs and its DGX-1 “AI supercomputer in a box.” And Qualcomm, well, they just want to be at the center of everything.

Now ARM, a company that arguably is at the center of everything, has announced its own entry into the space. ARM was itself recently bought for a cool $31 billion by the SoftBank Group, whose CEO, Masayoshi Son, recently went on record to suggest that by 2047 the chip in our shoes will have an IQ of over 10,000.

ARM has, of course, been playing around with AI architectures and designs for some time now, but its latest announcement, a new microarchitecture called DynamIQ, is aimed squarely at capturing and owning industry-scale AI computing tasks. It also addresses the legacy needs of manufacturers and providers who, despite racing into the future, still need to buy and support a wide variety of CPU frameworks.

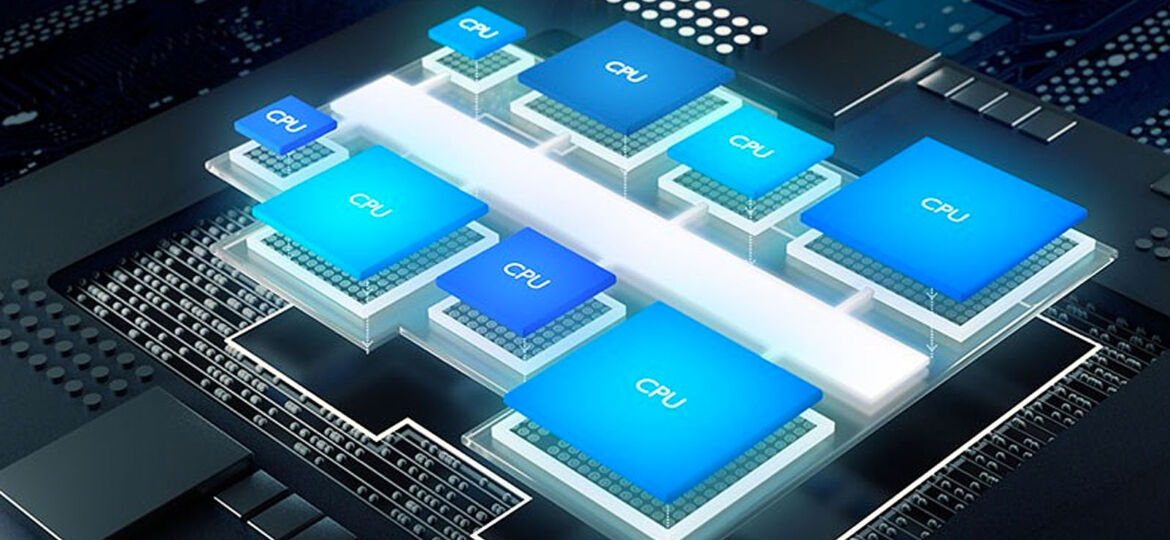

ARM believes that the introduction of DynamIQ represents a ‘monumental shift’ in the evolution of multi-core microarchitecture, and in the possibilities open to its existing Cortex-A processor offerings.

DynamIQ offers a new core schema that permits different types of CPU to co-exist in one memory subsystem, which ARM claims improves latency and responsiveness for active applications in a multi-platform environment.

The new design also has fine-grained power management tools to help power-hungry applications run as efficiently as possible. Users can control CPU speeds, benefit from speedier switching between power modes such as on, off and sleep, and power down parts of the memory subsystem. In short, it’s packed with a toolbox that’s ready to tackle environmental and sustainability concerns head on.

“It’s a step change in how we build CPUs and the way we stitch CPUs together… It’ll be in smartphones and tablets, for sure, but also automotive, networking and a whole range of other embedded devices. Anywhere a Cortex processor is used today, DynamIQ is going to be the next step forward,” said ARM’s product marketing head John Ronco.

DynamIQ also draws on ARM’s big.LITTLE framework, where higher-performance CPUs are paired with less energy-demanding, lower-performance ones. Using DynamIQ, customers will be able to connect up to eight CPUs of varying types in any configuration, under the banner of ‘heterogeneous computing’, which effectively positions DynamIQ as a Rosetta stone for legacy architecture in the new AI gold rush.

According to the company, DynamIQ is already being licensed to a variety of companies and is expected to hit the market in 2018. It can use the lower-powered processors to serve low-grade demands, activating the higher-powered CPUs only for requests that require the extra computing power.
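To make that idea concrete, here is a minimal sketch of the "smallest core that can do the job" principle. It is purely illustrative: the `Core` class, the `pick_core` function, the capacity numbers and the six-plus-two cluster layout are all hypothetical, and none of it reflects how ARM's hardware or any real operating system scheduler is actually implemented.

```python
from dataclasses import dataclass


@dataclass
class Core:
    name: str
    kind: str          # "LITTLE" or "big"
    capacity: int      # hypothetical relative compute units
    busy: bool = False


def pick_core(cores, demand):
    """Return the least capable idle core that can meet the demand, or None."""
    candidates = [c for c in cores if not c.busy and c.capacity >= demand]
    return min(candidates, key=lambda c: c.capacity, default=None)


# Hypothetical eight-core cluster: six efficiency cores plus two performance cores.
cluster = [Core(f"little{i}", "LITTLE", 1) for i in range(6)]
cluster += [Core(f"big{i}", "big", 4) for i in range(2)]

light = pick_core(cluster, demand=1)   # a low-grade task lands on a LITTLE core
heavy = pick_core(cluster, demand=3)   # only a big core has enough capacity
nothing = pick_core(cluster, demand=10)  # no core in this cluster can serve it

print(light.kind, heavy.kind, nothing)
```

In this toy model the big cores stay idle until a task arrives that the small cores cannot handle, which is the power-saving behaviour the article describes.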

The new framework is also extensible enough to support dedicated solutions that need on-chip control of CPU groups, effectively allowing manufacturers to embed AI accelerators directly into their hardware.

Cortex-A processors under DynamIQ, for example, will be able to scale multi-core configurations up to an eight-core limit, with fine control over the set-up of each processor and configurable power characteristics and performance profiles. DynamIQ also brings new processor instructions which, ARM says, will give new Cortex-A CPUs a fifty-fold increase in the performance of AI-related tasks over the next five years, relative to current benchmarks for Cortex-A73 systems.
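For a sense of scale, the fifty-fold figure can be turned into an equivalent yearly rate. The short calculation below assumes the gain compounds evenly across the five years, which is my simplification rather than anything ARM has stated; the variable names are illustrative only.

```python
# Assumption: the 50x gain compounds at a constant rate over five years.
total_gain = 50.0
years = 5

# Constant yearly multiplier that reaches total_gain after `years` years.
annual_factor = total_gain ** (1 / years)

print(f"{annual_factor:.2f}x per year")  # prints "2.19x per year"
```

In other words, under that assumption AI performance would have to slightly more than double every year to hit the target.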

The AI arms race has just heated up, again, and soon it’s going to get white hot.