WHY THIS MATTERS IN BRIEF

AI models are full of vulnerabilities, and this is yet another example of how their guardrails can be broken and the models hijacked to inflict damage and harm on others.

Love the Exponential Future? Join our XPotential Community, future proof yourself with courses from XPotential University, read about exponential tech and trends, connect, watch a keynote, or browse my blog.

In what appears to be a first-of-its-kind attack, Chinese state-sponsored hackers hijacked an Artificial Intelligence (AI) model from Anthropic and surreptitiously used it to automate break-ins at major corporations and foreign governments during a recent hacking campaign, the company said Thursday.

The effort focused on dozens of targets and involved a level of automation that Anthropic’s cybersecurity investigators had not previously seen, according to Jacob Klein, the company’s head of threat intelligence.

Hackers have used AI for years to handle individual tasks, such as crafting phishing emails or scanning the internet for vulnerable systems. In this instance, however, 80% to 90% of the attack was automated, with humans intervening at only a handful of decision points, Klein said.

The hackers conducted their attacks “literally with the click of a button, and then with minimal human interaction,” Klein said. Anthropic disrupted the campaigns and blocked the hackers’ accounts, but not before as many as four intrusions were successful. In one case, the hackers directed Anthropic’s Claude AI tools to query internal databases and extract data independently.

“The human was only involved in a few critical chokepoints, saying, ‘Yes, continue,’ ‘Don’t continue,’ ‘Thank you for this information,’ ‘Oh, that doesn’t look right, Claude, are you sure?’” Klein said.

Stitching hacking tasks together into nearly autonomous attacks is a new step in a growing trend toward automated cyber warfare, one that is giving hackers additional scale and speed.

This summer, the cybersecurity firm Volexity spotted China-backed hackers using AI tools to automate parts of a hacking campaign against corporations, research institutions and nongovernmental organizations. The hackers used large language models to decide whom to target, how to craft their phishing emails and how to write the malicious software they used to infect their victims, said Steven Adair, Volexity’s president. “AI is empowering the threat actor to do more, quicker,” he said.

Last week, Google reported that hackers linked to the Russian government attacked Ukraine using an AI model to generate customized malware instructions in real time.

US government officials have warned for years that China is targeting US AI technology as part of efforts to hack into US companies and government agencies and steal data.

A spokesman for the Chinese Embassy in Washington said that tracing cyberattacks is complex and accused the US of using cybersecurity to “smear and slander” China.

“China firmly opposes and cracks down on all forms of cyberattacks,” he said.

Anthropic didn’t disclose which corporations and governments the hackers tried to compromise, but said it had detected roughly 30 targets. In some cases, the handful of successful intrusions resulted in the theft of sensitive information. The company said the US government wasn’t among the victims of a successful intrusion, but wouldn’t comment on whether any part of the US government was among the targets.

Anthropic said it was confident, based on the digital infrastructure the hackers used as well as other clues, that the attacks were run by Chinese state-backed hackers.

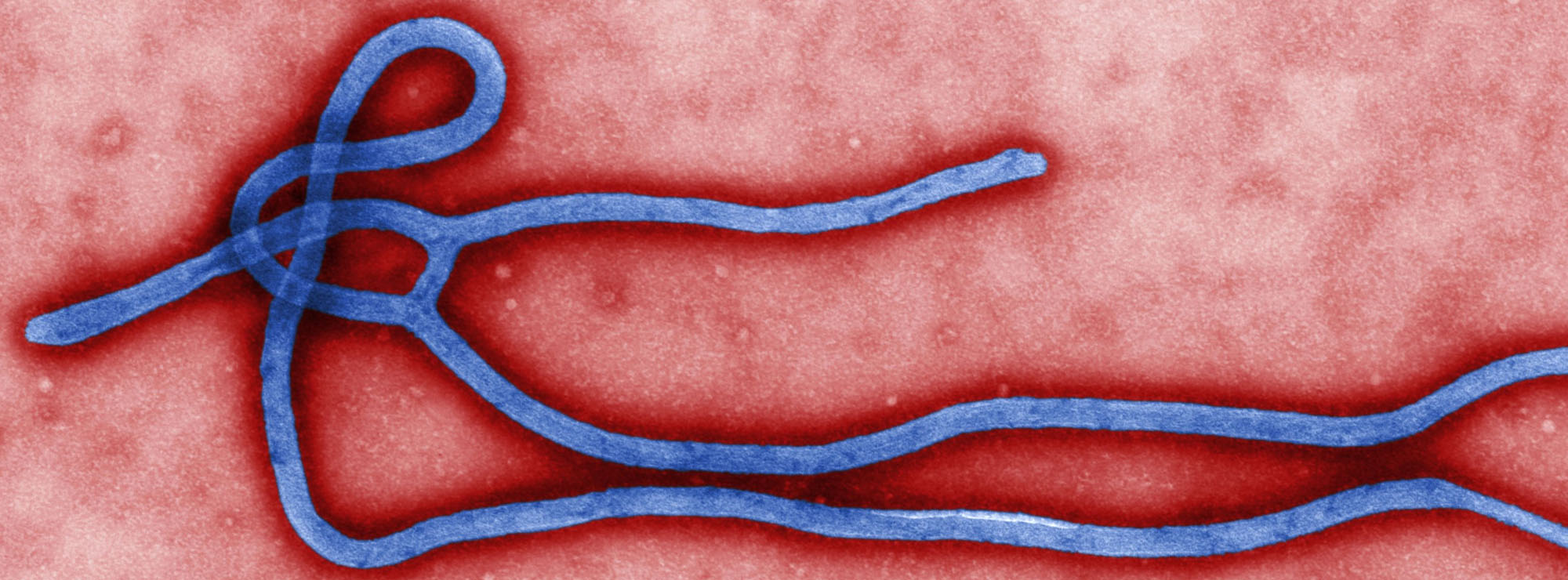

Hackers often use open-source AI tools in their attacks because open-source code is free and can be modified to remove restrictions against malicious activity. To use Claude for the attacks, however, the China-linked hackers had to sidestep Anthropic’s safeguards using a technique called jailbreaking, in this case by telling Claude that they were conducting security audits on behalf of the targets.

“In this case, what they were doing was pretending to work for legitimate security-testing organizations,” Klein said.

The hackers also built a system that broke each stage of the campaigns, from scanning for vulnerabilities to exfiltrating data, into discrete tasks that individually didn’t raise alarms, the company said.

Anthropic says that after the attacks, it updated the methods it uses to detect misuse, making it harder for attackers to use Claude to do something similar in the future.

The automated hacks still couldn’t run fully autonomously, however, because so-called AI hallucinations led to mistakes.

“It might say, ‘I was able to gain access to this internal system,’ ” when it wasn’t, Klein said of some of the hacking attempts. “It would exaggerate its access and capabilities, and that’s what required the human review.”

The use of AI agents to conduct attacks puts a spotlight on the dual-use dangers of AI tools. Anthropic has said it hopes to use AI to supercharge cybersecurity defenses. But stronger AI systems also make for stronger attackers.

Anthropic says its strategy is to focus on building AI capabilities that benefit defenders more than attackers, such as discovering known vulnerabilities.

“These kinds of tools will just speed up things,” said Logan Graham, who runs the Anthropic team that tests for catastrophic risks. “If we don’t enable defenders to have a very substantial permanent advantage, I’m concerned that we maybe lose this race.”